This disclosure is published as part of the Intellectual Frontiers Open Innovation Initiative. It is not a patent filing, but a technical blueprint shared to promote transparency and collaboration, and to prevent overlapping patent claims in this domain.

Abstract

The disclosed system provides an agentic AI-based enterprise platform that enables autonomous task execution across distributed enterprise systems using modular AI agents. The platform comprises a layered system architecture including an autonomous agent layer, task orchestration module, model selection engine, integration interfaces, logging infrastructure, and a workflow execution controller. Each AI agent operates as a stateless container with internal subsystems for task execution, contextual memory, AI model invocation, and output normalization. Workflows may be defined as directed acyclic graphs and dynamically instantiated by the task orchestration module based on enterprise-defined templates. In embodiments, the platform supports deployment in compliance-sensitive domains and enables scalable hybrid AI-human task execution strategies.

Introduction & Motivation

The embodiments described herein relate generally to artificial intelligence (AI) systems and, more particularly, to a configurable service platform for orchestrating autonomous AI agents for enterprise workflow automation.

In recent years, enterprises have increasingly turned to automation tools and intelligent systems to manage complex and high-volume business operations. Traditional approaches to business process automation have relied on rule-based engines, robotic process automation (RPA), or hardcoded logic, often leading to brittle systems that require extensive manual configuration and intervention. While cloud-based platforms and large language models (LLMs) have emerged to augment productivity, they are generally limited to single-turn tasks or static prompts, and lack orchestration capabilities across domains.

Furthermore, existing solutions are often not equipped to support dynamic coordination between independently executing agents, nor do they incorporate robust mechanisms for real-time context propagation, adaptive model selection, or compliance-centric auditability. There exists a need for a unified, modular system that can instantiate, manage, and coordinate the behavior of AI agents across a diverse array of enterprise tasks while supporting interoperability, fault tolerance, and human oversight.

Summary

The embodiments herein provide a modular and scalable agentic AI-based platform configured for autonomous execution of enterprise workflows. The platform includes an autonomous agent layer comprising a plurality of stateless software agents, each provisioned with an internal architecture including a task execution engine, in-memory contextual state manager, AI model invocation interface, and input-output normalization module.

A task orchestration module is configured to retrieve machine-readable workflow templates, instantiate agents per task node, and manage task state transitions through a finite state machine. The platform may further comprise a model selection engine that dynamically chooses AI models from a model repository based on semantic classification, resource constraints, and performance criteria. The model repository maintains versioned references to general-purpose, multimodal, or domain-specific AI models with failover capabilities.

The platform includes an integration layer supporting bidirectional data exchange with external enterprise systems via standardized protocols, and an API gateway interface for workflow ingress and multi-tenant security. A logging and traceability module captures runtime telemetry and error events, while a workflow execution controller manages agent health, task reallocation, and execution policy compliance.

In some embodiments, the platform incorporates domain-specific agent templates and protocol-based interoperability layers such as agent-to-agent (A2A) communication frameworks. These facilitate dynamic agent collaboration across vendor boundaries and distributed computing environments.

Additional embodiments describe runtime checkpoints, human-in-the-loop controls, and compliance-focused safeguards such as traceable decision logs, contextual fallback strategies, and execution policy enforcement. The disclosed system thereby enables cognitive enterprise transformation by extending human capabilities through orchestrated AI agents in a modular, auditable, and resilient infrastructure.

Description

The features of the disclosed embodiments may become apparent from the following detailed description taken in conjunction with the accompanying drawings showing illustrative embodiments herein, in which:

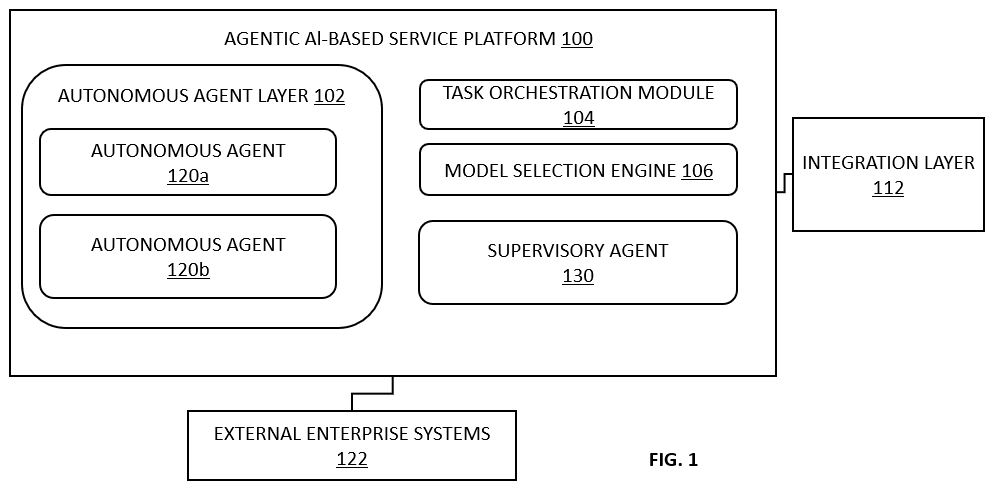

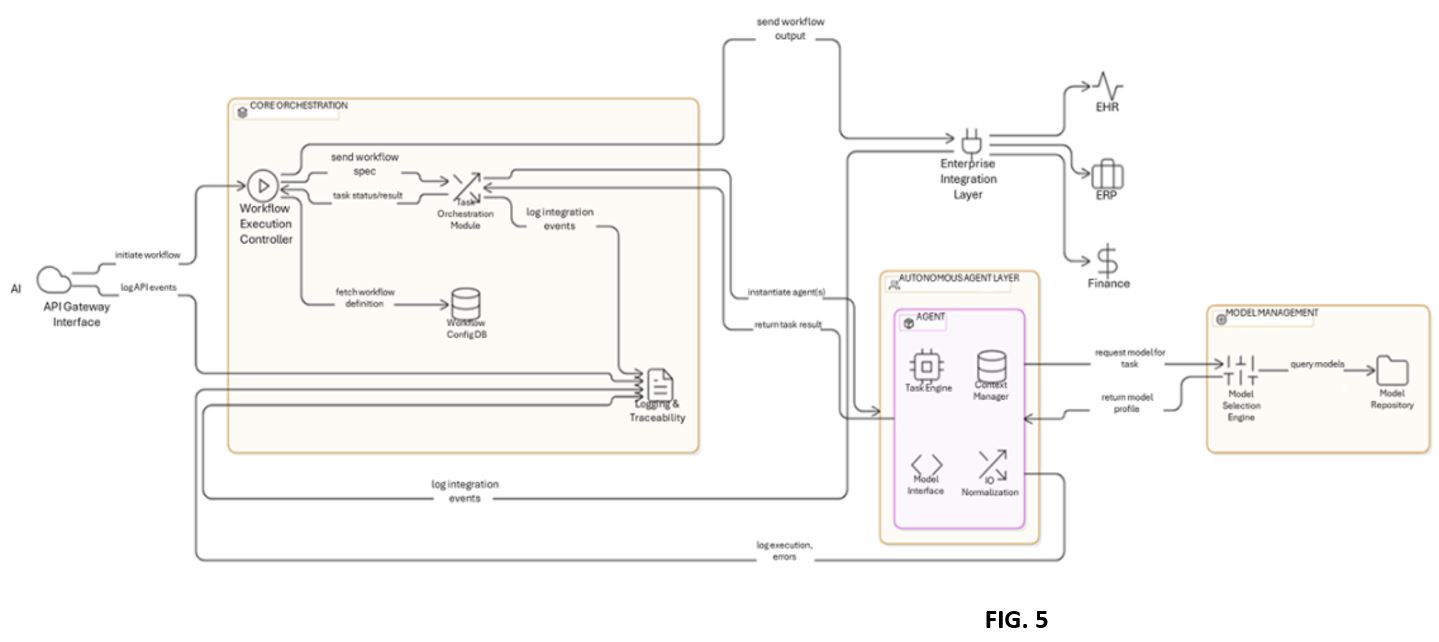

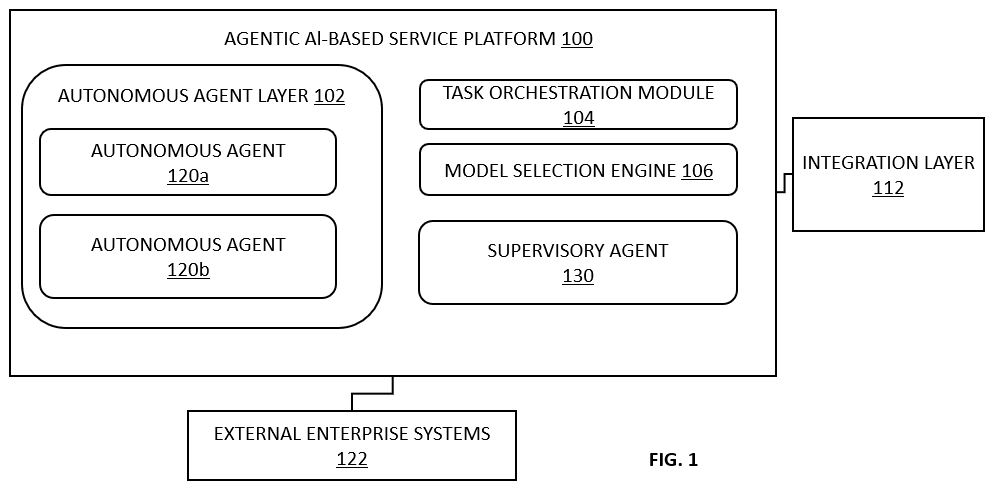

FIG. 1 illustrates a high-level system architecture of an agentic AI-based service platform configured for autonomous enterprise workflow execution across multiple domains, in various embodiments.

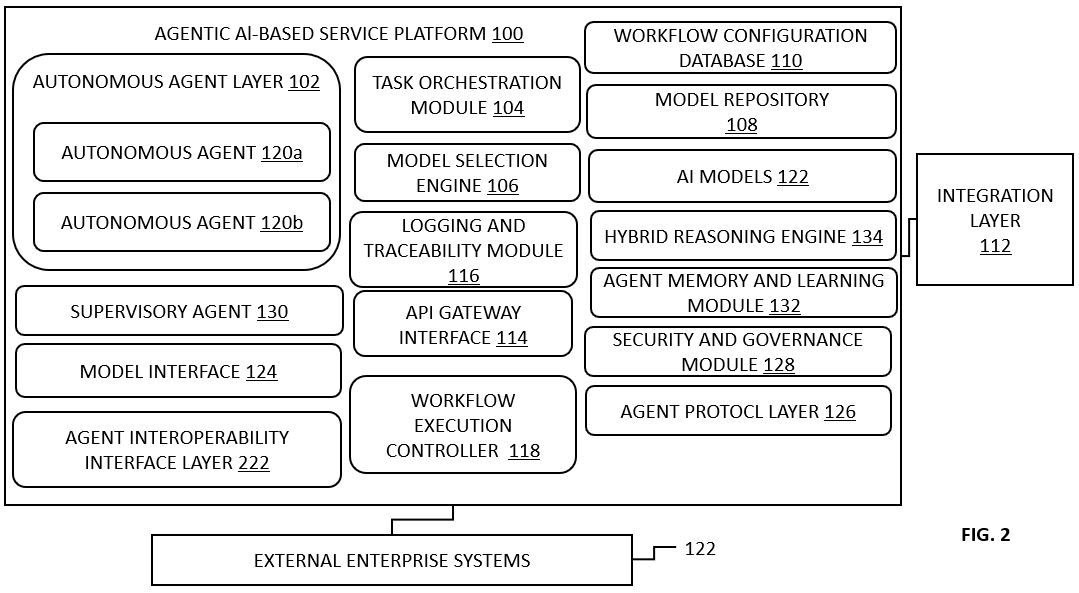

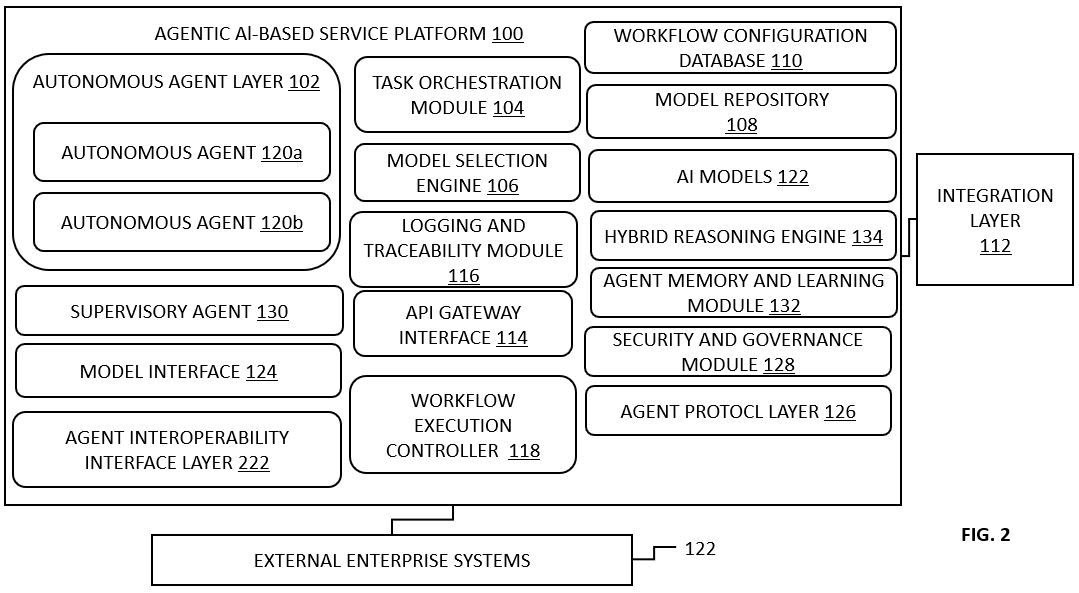

FIG. 2 illustrates a system architecture of the agentic AI-based service platform configured for autonomous enterprise workflow execution, in some embodiments.

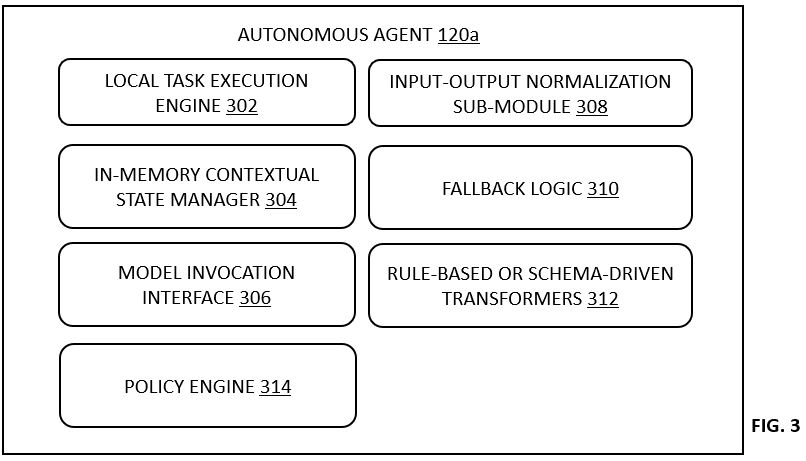

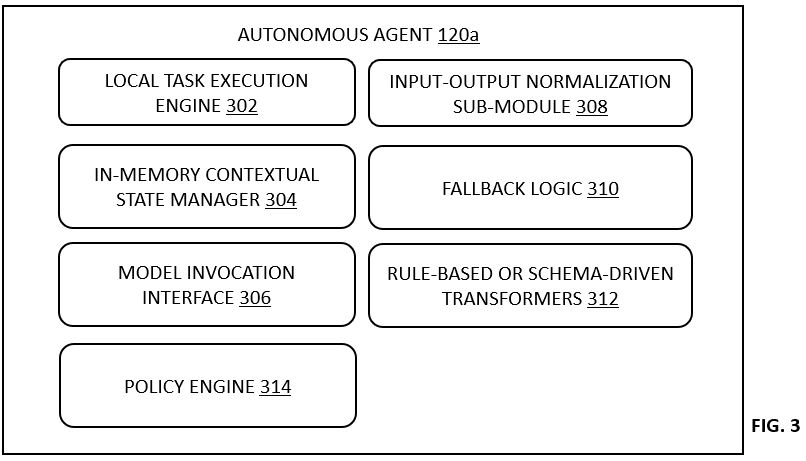

FIG. 3 illustrates an internal architecture of an exemplary autonomous agent, including sub-modules for local task execution, model invocation, context management, and fallback handling, in various embodiments.

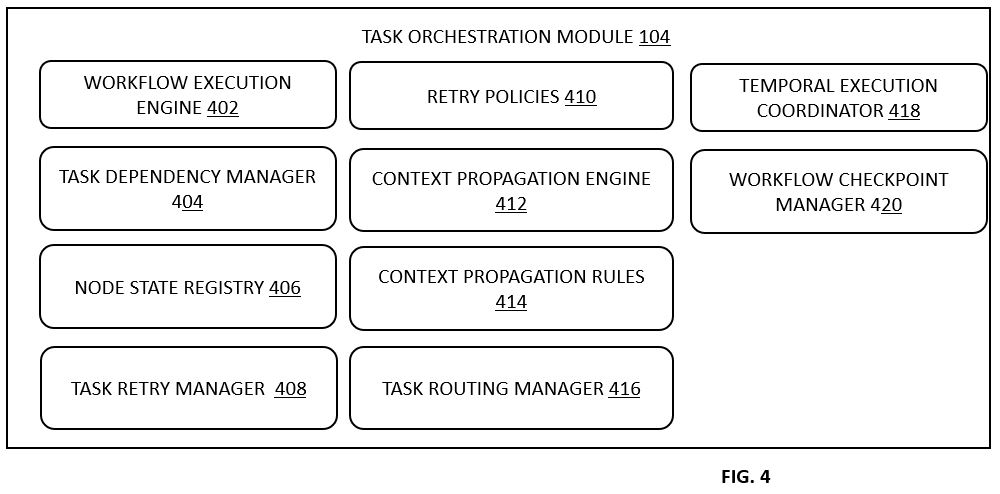

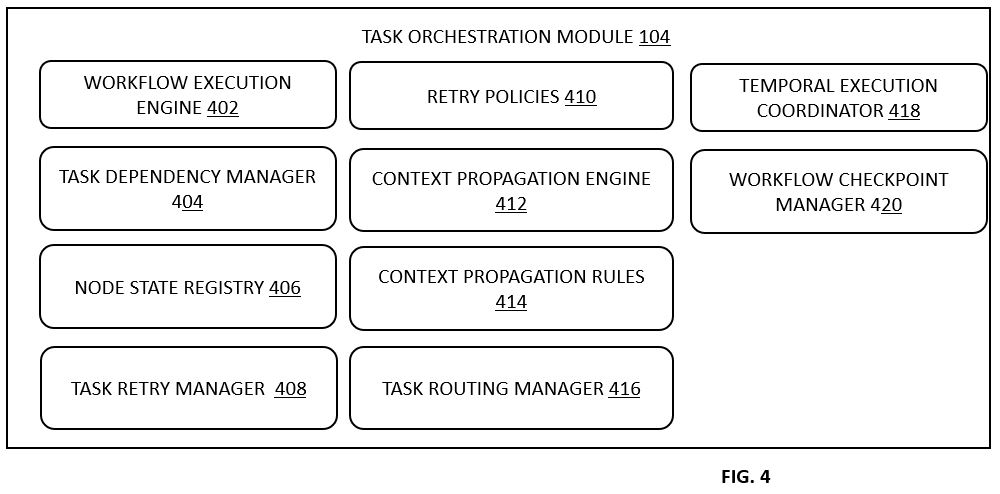

FIG. 4 illustrates internal components of a task orchestration module, including subcomponents for workflow execution, dependency management, context propagation, and task retry logic, in various embodiments.

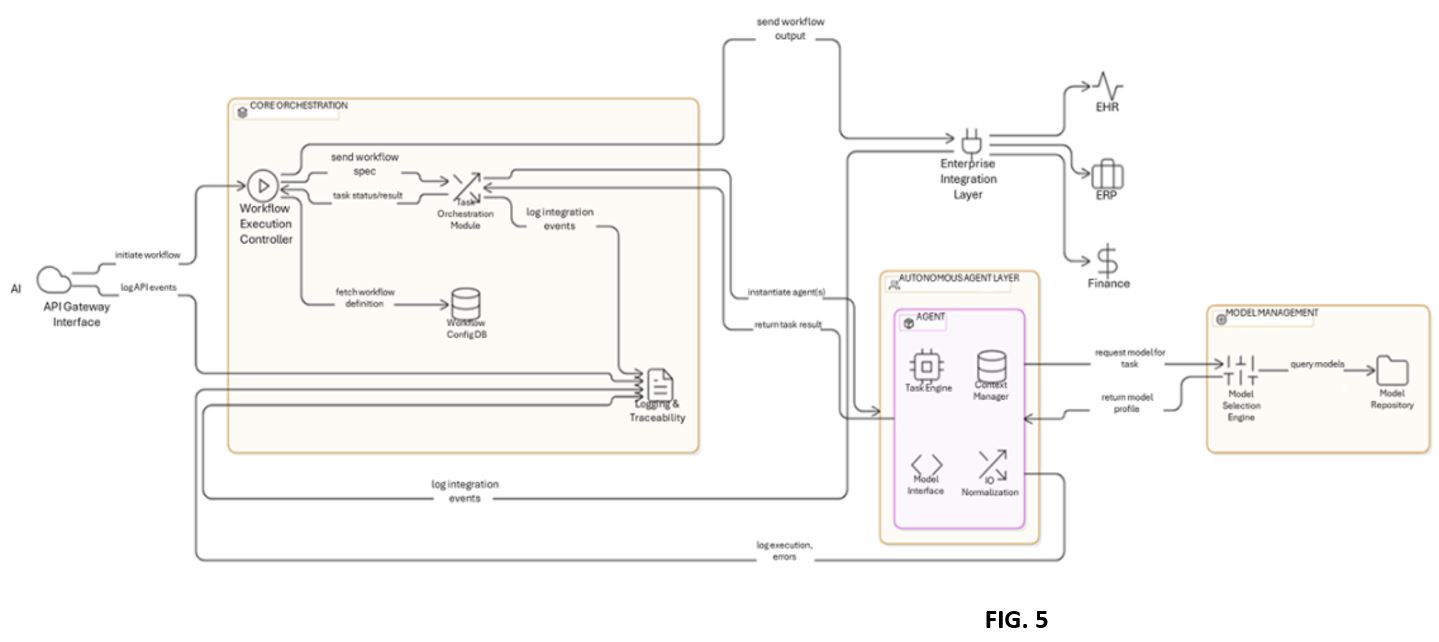

FIG. 5 illustrates a data flow diagram showing coordination among system components during runtime execution, including interaction with external enterprise systems, in various embodiments.

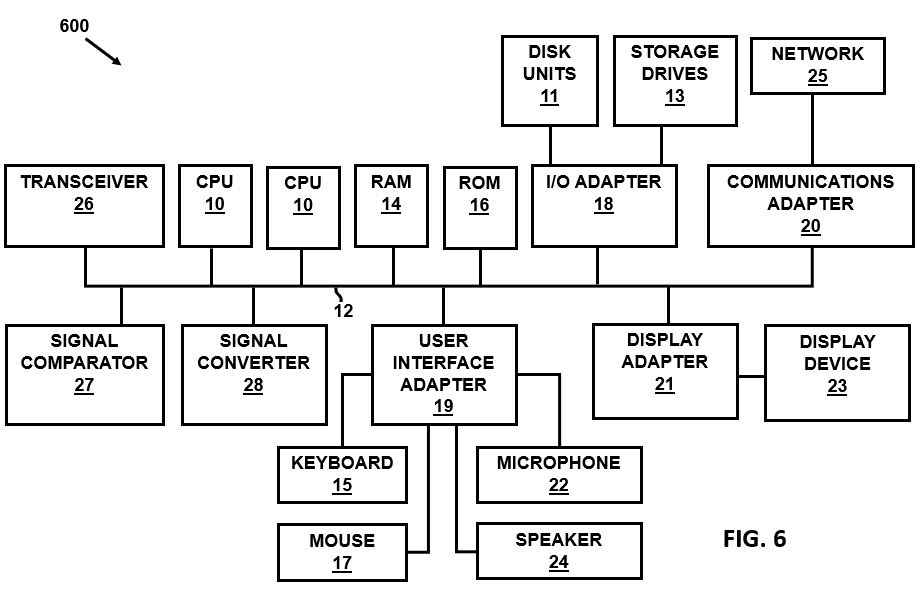

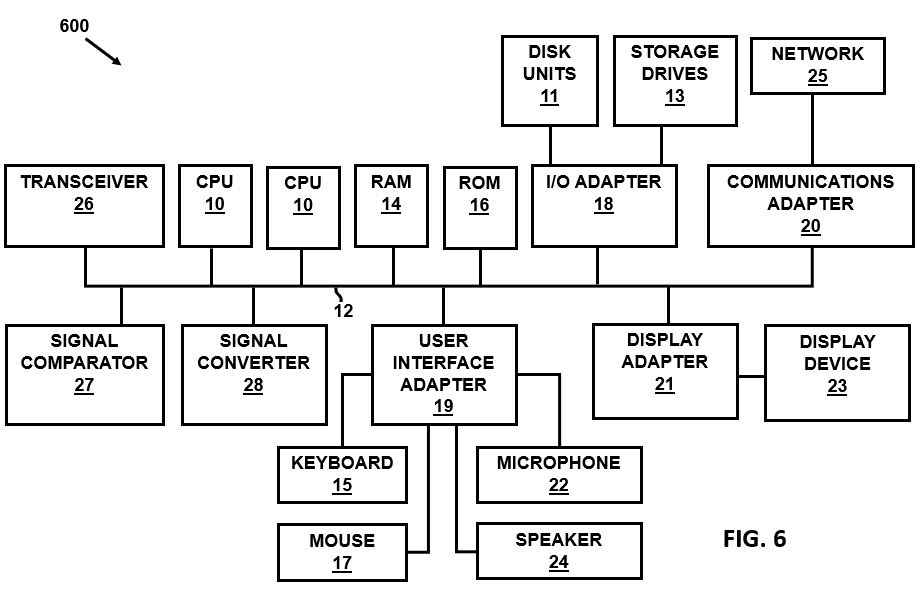

FIG. 6 illustrates a representative hardware environment for practicing various embodiments.

The embodiments herein and the various features and advantageous details thereof are explained more fully with reference to the non-limiting embodiments that are illustrated in the accompanying drawings and detailed in the following description. Descriptions of well-known components are omitted so as to not unnecessarily obscure the embodiments herein. The examples used herein are intended merely to facilitate an understanding of ways in which the embodiments herein may be practiced and to further enable those of skill in the art to practice the embodiments herein. Accordingly, the examples should not be construed as limiting the scope of the embodiments herein.

In the following detailed description, reference is made to the accompanying drawings which form a part hereof, and in which is shown by way of illustration specific embodiments in which the embodiments herein may be practiced. These embodiments, which are also referred to herein as “examples,” are described in sufficient detail to enable those skilled in the art to practice the embodiments herein, and it is to be understood that the embodiments may be combined, or that other embodiments may be utilized and that structural, logical, and electrical changes may be made without departing from the scope of the embodiments herein.

Referring now to FIGS. 1 and 2, there is illustrated a system architecture of an agentic AI-based service platform 100 configured for autonomous enterprise workflow execution across multiple domains. The platform 100 comprises a plurality of integrated components including an autonomous agent layer 102, a task orchestration module 104, a model selection engine 106, a model repository 108, a workflow configuration database 110, an enterprise integration layer 112, an API gateway interface 114, a logging and traceability module 116, and a workflow execution controller 118. The components are operatively interconnected to facilitate decomposition, allocation, execution, and monitoring of domain-specific tasks without requiring human intervention.

The autonomous agent layer 102 comprises a set of software-defined agents such as 120a and 120b, each implemented as an independent runtime container or process. Although only two such agents are shown in FIGS. 1 and 2, the autonomous agent layer 102 may include a single agent or more than two agents. Each agent 120a and 120b is provisioned with a local task execution engine 302, an in-memory contextual state manager 304, a model invocation interface 306, and an input-output normalization sub-module 308. These sub-components are shown and further discussed in conjunction with FIG. 3. The agents, collectively referred to as agents 120, are stateless between workflow instances but preserve a transient execution context during runtime. Each agent 120 receives an input in a structured data format comprising a task identifier, input type, payload content, and metadata. The agent 120 processes the input by invoking one or more AI models 122 via the model interface 124 and returns an output payload, which includes result codes, action results, and routing references for subsequent task nodes. In failure scenarios, the agents 120 are configured with a fallback policy for either re-attempting execution using alternative models or escalating to a predefined handler.
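
By way of non-limiting illustration, the structured input and output payloads described above might be modeled as in the following minimal sketch. The field names (`task_id`, `input_type`, `next_nodes`, and so on), the result codes, and the `run_agent` helper are assumptions introduced for illustration only and are not prescribed by the embodiments.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class AgentInput:
    """Structured input: task identifier, input type, payload, metadata."""
    task_id: str
    input_type: str
    payload: Any
    metadata: Dict[str, Any] = field(default_factory=dict)


@dataclass(frozen=True)
class AgentOutput:
    """Structured output: result code, action results, routing references."""
    task_id: str
    result_code: str
    action_results: Dict[str, Any]
    next_nodes: List[str]


def run_agent(agent_input: AgentInput, model: Callable[[Any], Any]) -> AgentOutput:
    """Invoke a model callable and wrap the result in the output envelope.

    `model` is a stand-in for an AI model invocation (hypothetical).
    """
    try:
        result = model(agent_input.payload)
    except Exception as exc:
        # A real agent would apply its fallback policy here; this sketch
        # only reports the failure in a structured form.
        return AgentOutput(agent_input.task_id, "FAILED", {"error": str(exc)}, [])
    routing = list(agent_input.metadata.get("next_nodes", []))
    return AgentOutput(agent_input.task_id, "OK", {"result": result}, routing)
```

In this sketch the routing references are simply copied from the input metadata; an implementation could equally derive them from the model result.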

The task orchestration module 104 is adapted to parse workflow specifications retrieved from the workflow configuration database 110. Each workflow may be defined as a directed acyclic graph (DAG) wherein nodes represent executable tasks and edges represent task dependencies. The workflow may be stored as a computer executable file, in various embodiments. The orchestration module 104 instantiates the agents 120 for each task node and manages their lifecycle through a task state machine incorporating states such as ready, executing, completed, and failed. It further supports dynamic propagation of context across the nodes and applies timeout and retry policies per node configuration.
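
As one possible sketch of the DAG traversal and task state machine described above, the following example orders nodes with the Python standard library `graphlib.TopologicalSorter` and propagates a shared context between nodes. The set of permitted transitions, the handler signature, and the `run_workflow` function are assumptions; timeout and retry policies are omitted for brevity.

```python
from enum import Enum
from graphlib import TopologicalSorter  # Python 3.9+


class TaskState(Enum):
    READY = "ready"
    EXECUTING = "executing"
    COMPLETED = "completed"
    FAILED = "failed"


# Assumed legal transitions; the states are named in the text, the edges are not.
ALLOWED = {
    TaskState.READY: {TaskState.EXECUTING},
    TaskState.EXECUTING: {TaskState.COMPLETED, TaskState.FAILED},
    TaskState.FAILED: {TaskState.READY},  # assumption: a retry re-queues the node
    TaskState.COMPLETED: set(),
}


def transition(current, target):
    """Return the target state, rejecting transitions not listed in ALLOWED."""
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.value} -> {target.value}")
    return target


def run_workflow(dependencies, handlers):
    """Run each node after its predecessors.

    dependencies: maps node name -> set of predecessor node names.
    handlers: maps node name -> callable(context) returning a dict of updates.
    """
    states, context = {}, {}
    for node in TopologicalSorter(dependencies).static_order():
        state = transition(TaskState.READY, TaskState.EXECUTING)
        try:
            # Each node sees a copy of the context produced by upstream nodes.
            context.update(handlers[node](dict(context)))
            state = transition(state, TaskState.COMPLETED)
        except Exception:
            state = transition(state, TaskState.FAILED)
        states[node] = state
        if state is TaskState.FAILED:
            break  # downstream nodes are never started
    return states, context
```

`TopologicalSorter` raises `graphlib.CycleError` when the dependency mapping contains a cycle, which gives a simple check that a workflow is in fact acyclic.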

The model selection engine 106 functions to dynamically determine the most appropriate AI model from among the various available AI models 122 to be used for a given agent task. The selection process takes into consideration factors such as a semantic type of the task (e.g., classification, reasoning, summarization), latency constraints, execution cost, system resource availability, and domain-specific relevance. The model selection engine 106 queries the model repository 108 and returns a model invocation profile comprising a selected model identifier, input prompt configuration, sampling parameters, and token limits.

The model selection engine 106 may be configured to determine, at runtime, a suitable AI model for performing a given task node assigned to an agent. The determination may be based on one or more model selection criteria, including the semantic classification of the task (such as summarization, classification, or extraction), latency constraints imposed by the workflow, computational resource availability, execution cost, historical performance, and domain-specific suitability. The model selection engine 106 may issue a query to the model repository 108 and receive in response a model invocation profile containing parameters such as the model identifier, the associated prompt formatting requirements, token sampling configurations, temperature values, and maximum token limits.
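
The selection logic described above may be sketched, purely as an example, as a filter over hard constraints followed by a ranking step. The record fields, the ranking rule (highest historical success rate, then lowest cost), and the default sampling values are assumptions chosen for illustration rather than features of any particular embodiment.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class ModelRecord:
    model_id: str
    task_types: FrozenSet[str]
    p95_latency_ms: int
    cost_per_call: float
    success_rate: float  # historical performance, 0.0 to 1.0


@dataclass(frozen=True)
class InvocationProfile:
    model_id: str
    prompt_template: str
    temperature: float
    max_tokens: int


def select_model(records: Iterable[ModelRecord], task_type: str,
                 max_latency_ms: int, max_cost: float) -> Optional[InvocationProfile]:
    """Filter by hard constraints, then rank the remaining candidates."""
    candidates = [
        r for r in records
        if task_type in r.task_types
        and r.p95_latency_ms <= max_latency_ms
        and r.cost_per_call <= max_cost
    ]
    if not candidates:
        return None
    best = max(candidates, key=lambda r: (r.success_rate, -r.cost_per_call))
    return InvocationProfile(
        model_id=best.model_id,
        prompt_template=f"[{task_type}] {{input}}",
        # Assumption: deterministic sampling for classification tasks.
        temperature=0.0 if task_type == "classification" else 0.7,
        max_tokens=512,
    )
```

Returning `None` when no model satisfies the constraints leaves the caller free to relax a constraint or escalate, rather than silently choosing an unsuitable model.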

The model repository 108 maintains indexed references to the AI models 122 available to the platform 100. The AI models 122 may include, without limitation, general-purpose large language models, lightweight distilled language models, multimodal transformers capable of handling text and visual inputs, and specialized models optimized for domain-specific tasks. The model repository 108 stores metadata for each model including, without limitation, version identifiers, performance metrics, API endpoints or container addresses, deployment locality (e.g., cloud or edge), and ordered fallback chains for redundancy.

The model repository 108 may serve as a versioned and indexed data store for managing the available AI models 122 within the service platform 100. In one embodiment, the model repository 108 may catalog large general-purpose language models, lightweight distilled models, multimodal models capable of processing both textual and visual input, and task-specific models optimized for domain-specific applications such as healthcare, finance, logistics, or legal compliance. Each model 122 stored in the model repository 108 may be annotated with metadata, including a version identifier, performance metrics under various workload conditions, inference endpoints or container addresses, hardware compatibility specifications, and deployment locality, including whether the AI model 122 is hosted on a cloud service, edge server, or local appliance. The model repository 108 may additionally maintain fallback hierarchies such that if a selected model fails or underperforms, an alternate model as discussed later may be invoked without requiring manual intervention.
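
A fallback hierarchy of the kind described above could, for example, be represented as an ordered list of alternate model identifiers stored with each repository entry. The following sketch assumes hypothetical class names (`ModelEntry`, `ModelRepository`), a hypothetical `invoke_with_fallback` helper, and a caller-supplied `call` function standing in for the actual inference request.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class ModelEntry:
    model_id: str
    version: str
    endpoint: str
    locality: str  # e.g. "cloud", "edge", or "local"
    fallbacks: List[str] = field(default_factory=list)  # ordered alternates


class ModelRepository:
    def __init__(self) -> None:
        self._entries: Dict[str, ModelEntry] = {}

    def register(self, entry: ModelEntry) -> None:
        self._entries[entry.model_id] = entry

    def fallback_chain(self, model_id: str) -> List[ModelEntry]:
        """Return the primary entry followed by its registered alternates."""
        primary = self._entries[model_id]
        alternates = [self._entries[f] for f in primary.fallbacks if f in self._entries]
        return [primary] + alternates


def invoke_with_fallback(repo: ModelRepository, model_id: str,
                         call: Callable[[ModelEntry, str], Any],
                         prompt: str) -> Tuple[str, Any]:
    """Try each model in the chain until one succeeds; no manual intervention."""
    errors = []
    for entry in repo.fallback_chain(model_id):
        try:
            return entry.model_id, call(entry, prompt)
        except Exception as exc:
            errors.append(f"{entry.model_id}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

The helper returns the identifier of the model that actually produced the result, so that the substitution can be recorded in the execution log.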

The workflow configuration database 110 provides persistent storage for a plurality of computer executable workflow templates that may be expressed in structured schema formats such as JSON or YAML. Each such template may include, without limitation, a workflow identifier, a set of task nodes, their interdependencies, associated agents, preferred model types, execution constraints, and error handling directives. The workflow definitions are version-controlled to support auditability and iterative refinement.

The workflow configuration database 110 may be configured to store the plurality of computer-executable workflow definitions that describe enterprise-specific business logic in a structured format. Each workflow definition may comprise a unique identifier, a directed set of task nodes, defined interdependencies among those nodes, and metadata describing agent associations, task constraints, preferred AI models, and fault-tolerance mechanisms. The workflow definitions may be version-controlled, enabling rollback and change tracking to ensure auditability and facilitate iterative refinement by enterprise administrators or platform operators.
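
For illustration only, a workflow definition of the kind described above might be stored as a JSON document and validated on load. The key names (`workflow_id`, `nodes`, `depends_on`, `max_retries`), the example workflow, and the `load_template` function are hypothetical; the embodiments do not mandate any particular schema.

```python
import json
from graphlib import CycleError, TopologicalSorter  # Python 3.9+

# Hypothetical template; every key and value below is illustrative.
TEMPLATE = """
{
  "workflow_id": "invoice-review",
  "version": 3,
  "nodes": [
    {"id": "extract", "agent": "doc-extractor", "depends_on": [], "max_retries": 2},
    {"id": "validate", "agent": "rule-checker", "depends_on": ["extract"], "max_retries": 1},
    {"id": "approve", "agent": "approver", "depends_on": ["validate"], "max_retries": 0}
  ]
}
"""


def load_template(text):
    """Parse a template and confirm that its nodes form a valid DAG."""
    template = json.loads(text)
    ids = [node["id"] for node in template["nodes"]]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate node id")
    graph = {node["id"]: set(node["depends_on"]) for node in template["nodes"]}
    unknown = set().union(*graph.values()) - set(ids)
    if unknown:
        raise ValueError(f"unknown dependencies: {sorted(unknown)}")
    try:
        order = list(TopologicalSorter(graph).static_order())
    except CycleError as exc:
        raise ValueError("workflow is not acyclic") from exc
    return template, order
```

Validating at load time means that a malformed template is rejected before any agent is instantiated for it.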

The enterprise integration layer 112, or simply the integration layer 112, may provide bidirectional data connectivity between the service platform 100 and external enterprise systems 122 such as, without limitation, electronic health records (EHRs), enterprise resource planning (ERP) systems, and financial software. The integration layer 112 supports multiple communication protocols including REST, gRPC, WebSocket, and asynchronous messaging queues. The integration layer 112 handles authentication, authorization, data format transformation, and error recovery mechanisms to ensure reliable data exchange.

The integration layer 112 may be operatively coupled to external enterprise systems 122 and configured to provide bidirectional data exchange between the AI-based service platform 100 and the third-party software systems, including electronic health records (EHR) platforms, enterprise resource planning (ERP) systems, customer relationship management (CRM) systems, and financial compliance software. The integration layer 112 may support synchronous and asynchronous communication protocols such as REST, gRPC, WebSocket, and message queue-based interfaces, and may implement middleware services to manage authentication, authorization, protocol translation, schema adaptation, and error recovery. In certain embodiments, the integration layer 112 may enforce access control via token-based mechanisms and ensure that data exchanged conforms to enterprise-specific schemas and compliance constraints.

The API gateway interface 114 exposes a secure ingress and egress point for the external enterprise systems 122 to initiate workflow executions and receive results. The API gateway interface 114 supports multi-tenant request routing, access control via role-based authentication, rate limiting, and API key lifecycle management.

The API gateway interface 114 may expose a secure interface by which the external enterprise systems 122 can initiate workflow executions and retrieve the corresponding results. The API gateway interface 114 may support multi-tenancy by partitioning requests according to organizational identifiers or API credentials, and may apply routing rules, usage limits, and access policies according to the privileges associated with each tenant. In some embodiments, the API gateway interface 114 may also perform request validation, payload inspection, and rate-limiting to prevent denial-of-service or misuse.
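
One simple way to realize the per-tenant usage limits mentioned above is a fixed-window request counter keyed by tenant identifier, as sketched below. The class name, the fixed-window strategy, and the decision to reject unknown tenants are assumptions; other schemes such as token buckets would serve equally well.

```python
import time
from typing import Callable, Dict, Tuple


class TenantRateLimiter:
    """Fixed-window request limiter partitioned by tenant identifier."""

    def __init__(self, limits: Dict[str, int], window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._limits = limits            # tenant id -> requests allowed per window
        self._window = window_seconds
        self._clock = clock              # injectable so tests can control time
        self._counters: Dict[str, Tuple[float, int]] = {}

    def allow(self, tenant_id: str) -> bool:
        limit = self._limits.get(tenant_id)
        if limit is None:
            return False  # assumption: unknown tenants are rejected outright
        now = self._clock()
        start, count = self._counters.get(tenant_id, (now, 0))
        if now - start >= self._window:
            start, count = now, 0  # a new window begins
        if count >= limit:
            self._counters[tenant_id] = (start, count)
            return False
        self._counters[tenant_id] = (start, count + 1)
        return True
```

Because each tenant has its own counter, heavy traffic from one tenant does not consume the allowance of another.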

The logging and traceability module 116 ensures system observability by recording detailed execution traces for each workflow. Logged data may include agent instantiation timestamps, task execution durations, input-output payloads (subject to privacy redaction), model invocation logs, and system errors. Logs may be stored in append-only, time-sequenced databases for audit compliance and debugging purposes.

The logging and traceability module 116 may be configured to record, in an immutable and append-only manner, the execution history of each workflow, including timestamps associated with agent instantiation, duration of task execution, input and output payloads (with sensitive information redacted or encrypted), model invocation records, and system errors. These logs may be stored in a time-series database or distributed file system, allowing retrospective analysis, security audits, debugging, or compliance certification. In certain embodiments, the logging and traceability module 116 may assign unique trace identifiers to each workflow instance, enabling end-to-end visibility into the data flow and execution state.
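
The append-only behavior and per-instance trace identifiers described above may be approximated as in the following sketch. Hash chaining of records is an assumption introduced here as one way to make tampering detectable; the text does not specify how immutability is achieved. The `TraceLog` class and its field names are likewise hypothetical.

```python
import hashlib
import json
import uuid


def _digest(body):
    """Stable SHA-256 over a JSON-serializable record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class TraceLog:
    """Append-only log for one workflow instance, linked by record hashes."""

    def __init__(self, trace_id=None):
        self.trace_id = trace_id or uuid.uuid4().hex
        self.records = []

    def append(self, event, payload, redact=()):
        # Replace sensitive fields before anything is stored.
        safe = {k: ("[REDACTED]" if k in redact else v) for k, v in payload.items()}
        body = {
            "trace_id": self.trace_id,
            "seq": len(self.records),
            "event": event,
            "payload": safe,
            "prev_hash": self.records[-1]["hash"] if self.records else "",
        }
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify(self):
        """Return True if no stored record has been altered or reordered."""
        prev = ""
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev or _digest(body) != record["hash"]:
                return False
            prev = record["hash"]
        return True
```

An in-memory list is used only to keep the sketch self-contained; a deployment would write the same records to a time-series database or distributed file system.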

The workflow execution controller 118 serves as a runtime coordinator responsible for enforcing execution flow across the agents 120, managing task dependencies, invoking the model selection engine 106, and interfacing with the integration layer 112 for output dispatch. The workflow execution controller 118 monitors agent health through heartbeat signals and reassigns tasks in case of agent failure or timeout conditions. The workflow execution controller 118 maintains a central state of the workflow instance and ensures compliance with execution policies configured per workflow definition.

The workflow execution controller 118 may act as a central coordinator that oversees the instantiation, execution, and completion of workflows. The workflow execution controller 118 may communicate with the task orchestration module 104, the agent layer 102, the model selection engine 106, and the integration layer 112 to maintain a coherent runtime environment. The workflow execution controller 118 may monitor the health of the individual agents 120 through heartbeat signals and may reassign or restart tasks in the event of failure, timeout, or degraded performance. The workflow execution controller 118 may also enforce global execution policies, including concurrency limits, task prioritization, and exception handling logic, based on workflow-specific constraints defined in the configuration database.
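
The heartbeat-based health monitoring and task reassignment described above might be sketched as follows. The `HeartbeatMonitor` class, the timeout rule, and the least-loaded reassignment rule are assumptions for illustration; an actual controller would also account for concurrency limits and task priorities.

```python
import time
from typing import Callable, Dict, List, Optional


class HeartbeatMonitor:
    """Tracks agent heartbeats and moves tasks away from silent agents."""

    def __init__(self, timeout_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._timeout = timeout_seconds
        self._clock = clock  # injectable so tests can control time
        self._last_beat: Dict[str, float] = {}
        self.assignments: Dict[str, Optional[str]] = {}  # task id -> agent id

    def beat(self, agent_id: str) -> None:
        self._last_beat[agent_id] = self._clock()

    def assign(self, task_id: str, agent_id: str) -> None:
        self.assignments[task_id] = agent_id

    def healthy_agents(self) -> List[str]:
        now = self._clock()
        return sorted(a for a, t in self._last_beat.items()
                      if now - t <= self._timeout)

    def reassign_stale(self) -> Dict[str, Optional[str]]:
        """Move tasks held by unhealthy agents to the least-loaded healthy one."""
        healthy = self.healthy_agents()
        moved: Dict[str, Optional[str]] = {}
        for task_id, agent_id in list(self.assignments.items()):
            if agent_id in healthy:
                continue
            load = {a: 0 for a in healthy}
            for holder in self.assignments.values():
                if holder in load:
                    load[holder] += 1
            # None marks a task that is waiting for any healthy agent.
            target = min(healthy, key=lambda a: (load[a], a)) if healthy else None
            self.assignments[task_id] = target
            moved[task_id] = target
        return moved
```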

In further embodiments, the service platform 100 may comprise an agent protocol layer 126 operatively coupled to the autonomous agent layer 102, the task orchestration module 104, and the workflow execution controller 118. The agent protocol layer 126 may be configured to implement standardized communication protocols between the agents 120 and other platform components. The agent protocol layer 126 may support inter-agent communication standards such as Model Context Protocol (MCP) and Agent-to-Agent (A2A) protocol specifications. In some embodiments, the agent protocol layer 126 may include subsystems for agent discovery, message routing, schema translation, and protocol-level fault handling, thereby enabling loosely coupled coordination between various heterogeneous agents such as the agents 120 across distributed infrastructure.

In some embodiments, the platform 100 may further comprise a security and governance module 128 communicatively linked to the logging and traceability module 116 and the workflow execution controller 118. The security and governance module 128 may be configured to enforce compliance constraints, access policies, and auditing requirements associated with autonomous execution of workflows. The security and governance module 128 may maintain traceable execution logs that include structured justification metadata for actions performed by the agents 120, including decision rationale, model selection identifiers, and threshold values applied during model inference. The security and governance module 128 may further comprise a delegation controller configured to assign task-level decision boundaries between the autonomous agents 120 and human operators based on sensitivity, regulatory classification, or organizational policy. In certain embodiments, the security and governance module 128 may support rule-based triggers for halting or rerouting workflows upon detection of predefined anomalies or risk thresholds.

The service platform 100 may also include a supervisory agent 130, distinct from the agents 120, which may be instantiated as a persistent meta-agent process within the workflow execution controller 118 or as a standalone runtime. The supervisory agent 130 may monitor operational status, behavioral consistency, and output coherence of the agents 120 during multi-agent workflow execution. In some embodiments, the supervisory agent 130 may perform conflict detection and resolution across concurrent agent outputs, initiate corrective actions including re-invocation or rollback of specific task nodes, and escalate control to human reviewers when decision ambiguity or elevated risk is detected. The supervisory agent 130 may interface with the agent protocol layer 126 and the task orchestration module 104 to dynamically adjust execution flows or inject constraints into agent 120 behavior in real time.

In some embodiments, the service platform 100 may comprise an agent memory and learning module 132 operatively coupled to the agents 120, the workflow execution controller 118, and the model repository 108. The agent memory and learning module 132 may maintain episodic memory stores associated with agent task histories, contextual data, and corrective feedback from prior executions. The agent memory and learning module 132 may be configured to persist selected execution artifacts across workflow instances to enable longitudinal learning and behavioral refinement. In some embodiments, the agent memory and learning module 132 may include reinforcement learning logic for updating task execution policies or model selection preferences based on reward feedback or observed success rates. The agent memory and learning module 132 may further support the construction or augmentation of structured knowledge graphs for specific enterprise domains.

In some embodiments, the service platform 100 may include a hybrid reasoning engine 134 configured to provide symbolic rule enforcement and logical constraint resolution in conjunction with probabilistic outputs from the AI models 122. The hybrid reasoning engine 134 may be communicatively linked to the model selection engine 106 and the agents 120, and may be invoked when a workflow node includes explicit reasoning requirements or rule validation logic. In such cases, the hybrid reasoning engine 134 may perform post-inference validation of agent-generated outputs against predefined symbolic schemas or regulatory knowledge bases. In some embodiments, the hybrid reasoning engine 134 may guide model prompt construction or sampling behavior based on ontological relationships and domain logic constraints, thereby improving task outcome reliability in high-assurance contexts.

In combination, the components described in FIGS. 1 and 2 operate to enable a robust, scalable, and autonomous AI platform 100 capable of full-cycle task execution within different kinds of enterprise settings. Each component may be designed to function independently yet interoperably, thus facilitating modular upgrades, horizontal scaling, and domain-specific customization.

In certain embodiments, each of the autonomous agents 120a and 120b described in conjunction with the autonomous agent layer 102 of FIGS. 1 and 2 may be instantiated within a dedicated runtime environment that provides operational isolation and computational independence. The agents 120 may be configured to operate as stateless entities across workflow instances, wherein no persistent memory is retained once the workflow concludes. However, during the execution of a given workflow instance, the agents 120 may maintain a transient execution context within an in-memory store, which may be used to preserve task-specific information such as intermediate processing results, routing directives, error flags, or model invocation outcomes. The transient state may be discarded upon completion or failure of the workflow instance.

In certain embodiments, the service platform 100 may further comprise an agent interoperability interface layer 222 configured to enable secure, standards-compliant communication between the autonomous agents 120 operating across different runtime environments, vendor platforms, or organizational domains. The agent interoperability interface layer 222 may implement an open protocol, such as the Agent2Agent (A2A) protocol, to support structured interaction between a local client agent and one or more remote agents, including but not limited to third-party hosted agents. The agent interoperability interface layer 222 may integrate with the task orchestration module 104 and the workflow execution controller 118 to enable discovery, delegation, and execution of cross-agent tasks through protocol-compliant exchanges.

The agent interoperability interface layer 222 may be configured to retrieve and parse standardized capability descriptors, such as agent cards, from registered remote agents. Each agent card may be formatted as a JSON object comprising metadata fields including task types supported, input/output modalities, execution time characteristics, and authentication requirements. The agent interoperability interface layer 222 may interact with a federated agent card registry (not separately shown) or directly query peer agents using predefined discovery endpoints. The workflow execution controller 118 may dynamically delegate sub-tasks to remote agents based on the discovered capabilities and on fitness criteria such as specialization, availability, or enterprise trust ranking.
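
As a rough sketch of capability-based delegation, the following example parses agent cards supplied as JSON strings and selects a remote agent by trust ranking and typical execution time. The card keys used here (`name`, `task_types`, `input_modalities`, `typical_seconds`) are hypothetical stand-ins for the metadata fields listed above and are not taken from any published agent card schema; the `pick_remote_agent` function and its scoring rule are likewise assumptions.

```python
import json
from typing import Dict, Iterable, Optional


def pick_remote_agent(cards_json: Iterable[str], task_type: str, modality: str,
                      trust: Dict[str, int]) -> Optional[str]:
    """Return the name of the best-fitting remote agent, or None.

    Fitness is ranked by enterprise trust score first and by shorter
    typical execution time second.
    """
    best = None
    for raw in cards_json:
        card = json.loads(raw)
        if task_type not in card["task_types"]:
            continue  # agent does not specialize in this task
        if modality not in card["input_modalities"]:
            continue  # agent cannot accept this kind of input
        score = (trust.get(card["name"], 0), -card["typical_seconds"])
        if best is None or score > best[0]:
            best = (score, card["name"])
    return best[1] if best else None
```

Agents absent from the trust table receive a score of zero in this sketch, so they are chosen only when no ranked agent qualifies.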

In various embodiments, the agent interoperability interface layer 222 may support a protocol-compliant task object definition and lifecycle state machine. Each task object may encapsulate metadata describing a unit of work including task identifiers, input payload, execution context, and deadline constraints. The workflow execution controller 118 may coordinate task handoff between a local agent 120 acting as a client and one or more remote agents via the interoperability layer 222. Upon task completion, the remote agent may return an output artifact, optionally including structured outputs, intermediate states, and explanations. The task lifecycle management may support long-running tasks, partial responses, and real-time progress updates.

The agent interoperability interface layer 222 may further be configured to mediate message formats between heterogeneous agents 120. Each message exchanged via the interoperability layer 222 may contain one or more parts, wherein each part comprises a distinct content type such as text, structured data, image, audio, or video.

Each of the agents 120 may further include an internal architecture including the task execution engine 302 alternatively referred to as the local task execution engine 302 without limitations (as shown in FIG. 3) adapted to parse and perform task-specific operations. Referring now to FIG. 3 in conjunction with FIGS. 1 and 2, the service platform 100, and in particular the agent 120a, is further described herein. The internal architecture of the agent 120a may further include the model invocation interface 306 for accessing external or internal AI models together referred to as the AI models 122, a data normalization module 308 alternatively referred to as the input-output normalization sub-module 308 configured to convert incoming and outgoing payloads into canonical formats for interoperability, and a context manager 304 alternatively referred to as the in-memory contextual state manager 304 without limitations for caching runtime metadata. In certain embodiments, the agent 120a may be equipped with a fallback logic 310 that triggers an alternate processing path in response to defined error conditions. The fallback logic 310 may include re-invoking the same task with an alternative AI model, escalating the task to a supervisory agent, or returning a structured failure response to the task orchestration module 104.

In various embodiments, the agents 120, including but not limited to the agent 120a, may be initialized dynamically in response to a workflow execution event triggered by the task orchestration module 104. In embodiments, initialization parameters may be derived from workflow-specific metadata stored in the workflow configuration database 110, including task-specific constraints, resource budgets, and performance targets. Upon instantiation, the agents 120 may configure their internal components including the task execution engine 302, the in-memory contextual state manager 304, the model invocation interface 306, and the input-output normalization sub-module 308 to align with the designated task node requirements.

In some implementations, the task execution engine 302 may be configured to support multiple execution modes including synchronous, asynchronous, and batch processing. The execution mode may be determined at runtime based on parameters embedded within the input payload received from the task orchestration module 104. For example, when the payload indicates a time-sensitive classification task, the task execution engine 302 may prioritize low-latency execution paths, whereas for compute-intensive reasoning tasks, the task execution engine 302 may defer to background processing modes using task queues.

The in-memory contextual state manager 304 may be further configured to cache task-specific variables, routing directives, and model selection feedback within a temporal memory space. In some embodiments, any contextual information retained by the in-memory contextual state manager 304 may be passed forward to downstream agents from among the agents 120 through the task orchestration module 104, thereby enabling inter-agent context propagation across a workflow. The contextual data may be purged automatically upon task completion, failure, or reassignment to preserve stateless behavior across workflow instances, while allowing temporal consistency during active execution.
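
The caching, forwarding, and purging behavior described above could be sketched as a small in-memory store keyed by task identifier. The `ContextStore` class and the `finish_task` helper are hypothetical names, and the choice to forward only an explicit list of keys is an assumption.

```python
from typing import Any, Dict, Iterable, Optional


class ContextStore:
    """Transient, in-memory context keyed by task identifier."""

    def __init__(self) -> None:
        self._by_task: Dict[str, Dict[str, Any]] = {}

    def put(self, task_id: str, key: str, value: Any) -> None:
        self._by_task.setdefault(task_id, {})[key] = value

    def snapshot(self, task_id: str,
                 keys: Optional[Iterable[str]] = None) -> Dict[str, Any]:
        """Return a shallow copy of the context, optionally limited to `keys`."""
        data = self._by_task.get(task_id, {})
        if keys is None:
            return dict(data)
        return {k: data[k] for k in keys if k in data}

    def purge(self, task_id: str) -> None:
        self._by_task.pop(task_id, None)


def finish_task(store: ContextStore, task_id: str,
                forward_keys: Iterable[str]) -> Dict[str, Any]:
    """Collect the context to pass downstream, then purge the local copy."""
    forwarded = store.snapshot(task_id, forward_keys)
    store.purge(task_id)
    return forwarded
```

Purging immediately after the snapshot is taken keeps the agent stateless between tasks while still letting the orchestration module hand selected values to downstream agents.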

The model invocation interface 306 may support invocation protocols compatible with a plurality of model endpoints hosted internally or externally to the platform 100. In one embodiment, the model invocation interface 306 may construct an execution request comprising a structured input, model identifier, sampling configuration, and token limits, as received from the model selection engine 106. After invocation, the model invocation interface 306 may asynchronously await model inference outputs and implement timeout thresholds and retry logic governed by execution policies defined within the workflow configuration database 110.
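
By way of example, the asynchronous await, timeout threshold, and retry logic described above can be expressed with the standard `asyncio.wait_for` call. The `invoke_with_retry` function, its parameters, and the linear backoff are assumptions; `call` stands in for whatever coroutine actually contacts the model endpoint.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict


async def invoke_with_retry(call: Callable[[Dict[str, Any]], Awaitable[Any]],
                            request: Dict[str, Any], timeout_s: float,
                            max_attempts: int, backoff_s: float = 0.0) -> Any:
    """Await a model call, retrying on timeout or connection errors."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            # wait_for cancels the pending call when the timeout elapses.
            return await asyncio.wait_for(call(request), timeout=timeout_s)
        except (asyncio.TimeoutError, ConnectionError) as exc:
            last_error = exc
            if attempt < max_attempts:
                await asyncio.sleep(backoff_s * attempt)  # linear backoff (assumed)
    raise RuntimeError(
        f"model invocation failed after {max_attempts} attempts"
    ) from last_error
```

In practice the values of `timeout_s` and `max_attempts` would be read from the execution policy of the workflow definition rather than hard-coded by the caller.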

In accordance with certain embodiments, the input-output normalization sub-module 308 may include rule-based or schema-driven transformers 312 for aligning incoming data formats with a canonical input schema expected by the AI models 122. Similarly, the output normalization performed by the normalization sub-module 308 may ensure that the results returned from the AI models 122 conform to a structured response format, including result codes, actionable outcomes, and next-hop routing instructions. Such normalization may facilitate consistent downstream processing by other agents 120 or system modules such as the workflow execution controller 118.

The fallback logic 310, as integrated within the agent 120a, may include a policy engine 314 capable of evaluating error conditions such as inference failures, unacceptable confidence scores, or timeout expirations. Based on such evaluation, the fallback logic 310 may select a predefined recovery strategy, which may include re-attempting the same task with an alternative model retrieved from the model repository 108, escalating the task to a supervisory agent (not separately illustrated), or generating a structured failure payload for routing back to the task orchestration module 104. These strategies may be hierarchically organized and prioritized based on configuration rules encoded within the workflow definition.
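The hierarchical selection of a recovery strategy may, for example, be sketched as an ordered series of checks. The `TaskResult` fields, the strategy labels, and the confidence threshold of 0.7 are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    ok: bool
    confidence: float = 0.0
    timed_out: bool = False


def choose_recovery(result: TaskResult, alternatives_left: int,
                    min_confidence: float = 0.7,
                    supervisor_available: bool = True) -> str:
    """Return the first applicable strategy in priority order."""
    if result.ok and not result.timed_out and result.confidence >= min_confidence:
        return "accept"
    # Highest-priority recovery: try another model from the repository.
    if alternatives_left > 0:
        return "retry_with_alternative_model"
    # Next: hand the task to a supervisory agent.
    if supervisor_available:
        return "escalate_to_supervisor"
    # Last resort: report a structured failure to the orchestration module.
    return "emit_failure_payload"
```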

In an embodiment, the agents 120 may include logging hooks to the logging and traceability module 116 for emitting execution telemetry including agent lifecycle events, task success or failure status, model invocation latencies, and anomaly flags. These hooks may be conditionally activated based on workflow-level observability requirements, enabling selective tracing and debugging without incurring unnecessary overhead. These trace logs may be indexed using workflow instance identifiers to support retrospective audits and root cause analysis.
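A simplified sketch of conditionally activated logging hooks, indexed by workflow instance identifier, is given below. The `TraceLog` class, its in-memory record list, and the event names are assumptions for illustration; a deployment would typically forward such records to the logging and traceability module 116.

```python
import time


class TraceLog:
    """Collects telemetry only for event types enabled for a workflow."""

    def __init__(self, enabled_events) -> None:
        self.enabled = set(enabled_events)
        self.records: list = []

    def emit(self, workflow_instance_id: str, event: str, **fields) -> None:
        # Disabled events are dropped to avoid unnecessary overhead.
        if event not in self.enabled:
            return
        self.records.append({
            "workflow_instance_id": workflow_instance_id,
            "event": event,
            "timestamp": time.time(),
            **fields,
        })

    def by_instance(self, workflow_instance_id: str) -> list:
        # Lookup by instance identifier supports retrospective audits.
        return [r for r in self.records
                if r["workflow_instance_id"] == workflow_instance_id]
```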

It is to be appreciated that while the internal architecture of the agents 120 has been described with reference to specific sub-components including the task execution engine 302, the contextual state manager 304, the model invocation interface 306, and the normalization sub-module 308, among various other components, the specific arrangement and interaction of these sub-components may vary across implementations without departing from the scope and spirit of the autonomous agent layer 102 as illustrated in FIGS. 1–3. This modular architecture enables selective customization, optimization, or replacement of individual sub-components in accordance with workload demands, deployment environments, or compliance requirements.

FIG. 4 illustrates various components of the task orchestration module 104. The task orchestration module 104 may comprise a workflow execution engine 402 adapted to interpret machine-readable workflow templates retrieved from the workflow configuration database 110. The workflow templates may be expressed in structured data formats such as JSON, YAML, or equivalent, and may define a directed acyclic graph of task nodes and associated dependencies. Each task node may reference an assigned agent, input-output constraints, preferred models, execution timeouts, and retry policies. The task orchestration module 104 may instantiate the agents 120 on a per-task basis and may monitor their execution state using a finite state machine, with execution states including ready, executing, completed, failed, or retried. The task orchestration module 104 may propagate context dynamically across a task graph, enabling the downstream agents 120 to reference upstream decisions or metadata.
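For illustration only, a workflow template and the associated execution-state machine may be sketched as follows. The JSON field names, the example workflow, and the particular set of permitted transitions are assumptions; the description above names the states but does not prescribe the transitions between them. The sketch validates dependency references but does not check for cycles.

```python
import json

# Hypothetical template with two task nodes.
TEMPLATE_JSON = """
{
  "workflow_id": "invoice-review",
  "nodes": [
    {"id": "extract", "agent": "extractor", "depends_on": [],
     "timeout_s": 30, "max_retries": 2},
    {"id": "classify", "agent": "classifier", "depends_on": ["extract"],
     "timeout_s": 10, "max_retries": 1}
  ]
}
"""

# Assumed transitions between the execution states named in the text.
ALLOWED_TRANSITIONS = {
    "ready": {"executing"},
    "executing": {"completed", "failed"},
    "failed": {"retried"},
    "retried": {"executing"},
    "completed": set(),
}


def load_template(text: str) -> dict:
    """Parse a template and verify every dependency names a known node."""
    template = json.loads(text)
    ids = {node["id"] for node in template["nodes"]}
    for node in template["nodes"]:
        missing = set(node["depends_on"]) - ids
        if missing:
            raise ValueError(f"unknown dependencies: {sorted(missing)}")
    return template


def transition(current: str, new: str) -> str:
    """Return the new state, rejecting transitions the machine disallows."""
    if new not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current} -> {new}")
    return new
```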

In some embodiments, and as further illustrated in FIG. 4, the task orchestration module 104 may further comprise a task dependency manager 404 configured to evaluate and manage inter-task dependencies within the workflow definition. The task dependency manager 404 may analyze the directed acyclic graph structure of the workflow to identify upstream and downstream relationships between the task nodes. After identification of dependency constraints, the task dependency manager 404 may enforce execution sequencing, such that a downstream task node is only instantiated once all its parent nodes have reached a terminal state including completed or failed. The task dependency manager 404 may also be operatively coupled to the workflow execution engine 402 to resolve dynamic branching or conditional task paths based on runtime decision logic encoded within the workflow template.
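The sequencing rule described above, under which a downstream task node is instantiated only after all of its parent nodes have reached a terminal state, may be sketched as follows. The function name and the convention that a node absent from `states` has not yet been instantiated are assumptions for illustration.

```python
TERMINAL_STATES = {"completed", "failed"}


def instantiable_nodes(dependencies: dict, states: dict) -> list:
    """Return nodes not yet instantiated whose parents are all terminal.

    dependencies maps each node id to a list of its parent node ids;
    states maps already-instantiated node ids to their execution state.
    """
    ready = []
    for node, parents in dependencies.items():
        if node in states:
            continue  # already instantiated
        if all(states.get(parent) in TERMINAL_STATES for parent in parents):
            ready.append(node)
    return sorted(ready)
```

A node with no parents is immediately eligible, since the condition over an empty parent list is trivially satisfied.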

The task orchestration module 104 may further include a node state registry 406 configured to maintain a persistent or in-memory mapping of execution states of the task nodes associated with a given workflow instance. The node state registry 406 may be updated in real time as the agents 120 report status transitions, such as from ready to executing or from executing to completed. The node state registry 406 may expose an interface for querying current and historical task states, which may be utilized by the workflow execution engine 402, the workflow execution controller 118, or external monitoring tools for traceability and audit purposes.

In accordance with some embodiments, the task orchestration module 104 may further comprise a task retry manager 408 configured to enforce retry policies 410 on failed or incomplete task executions. The task retry manager 408 may access policy definitions embedded within the workflow template retrieved from the workflow configuration database 110, including maximum retry counts, retry intervals, and escalation directives. Upon detecting a task failure as logged in the node state registry 406, the task retry manager 408 may schedule a retry event using a delay queue, and may optionally adjust invocation parameters such as input payload adjustments or alternate model selections via coordination with the model selection engine 106.
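As a non-limiting sketch, a retry manager backed by a delay queue may be modeled with a priority heap ordered by due time. The class name, the fixed retry interval, and the use of caller-supplied timestamps are assumptions made to keep the example self-contained.

```python
import heapq


class RetryManager:
    """Schedules retries on a delay queue, bounded by a maximum count."""

    def __init__(self, max_retries: int, interval_s: float) -> None:
        self.max_retries = max_retries
        self.interval_s = interval_s
        self._attempts: dict = {}
        self._queue: list = []  # heap of (due_time, node_id)

    def on_failure(self, node_id: str, now: float) -> bool:
        """Schedule a retry; return False when the task should be escalated."""
        attempts = self._attempts.get(node_id, 0)
        if attempts >= self.max_retries:
            return False
        self._attempts[node_id] = attempts + 1
        heapq.heappush(self._queue, (now + self.interval_s, node_id))
        return True

    def due(self, now: float) -> list:
        """Pop and return every node whose retry delay has elapsed."""
        ready = []
        while self._queue and self._queue[0][0] <= now:
            ready.append(heapq.heappop(self._queue)[1])
        return ready
```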

The task orchestration module 104 may also include a context propagation engine 412 configured to enable inter-task communication and shared data flow across the task nodes. The context propagation engine 412 may receive context objects generated by the agents 120 upon completion of their assigned tasks and may propagate relevant data fields to the downstream nodes in accordance with context propagation rules 414 defined in the workflow template. The context propagation engine 412 may support selective inheritance of variables, context isolation scopes, and transformation of data fields to comply with expected input formats of the agents 120.
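Selective inheritance and field transformation under the context propagation rules 414 may be illustrated by the sketch below. The rule keys `source`, `target`, and `transform` are hypothetical; fields not named by any rule are withheld from the downstream node.

```python
def propagate(context: dict, rules: list) -> dict:
    """Build the downstream context from an upstream context and rules."""
    downstream = {}
    for rule in rules:
        source = rule["source"]
        if source not in context:
            continue  # nothing to inherit for this rule
        value = context[source]
        transform = rule.get("transform")
        if transform is not None:
            # Adapt the value to the format the downstream agent expects.
            value = transform(value)
        downstream[rule.get("target", source)] = value
    return downstream
```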

In some configurations, the task orchestration module 104 may further include a task routing manager 416, which may be responsible for determining the appropriate agent 120 to which a given task node should be assigned. The task routing manager 416 may evaluate factors including agent workload distribution, execution history, agent specialization, and proximity to data sources (e.g., local vs. cloud-based agents). In certain embodiments, the task routing manager 416 may coordinate with the workflow execution controller 118 to reassign or rebalance tasks in response to agent failure or latency threshold violations.
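One simplified way to combine several of the routing factors listed above is to filter agents by specialization and then prefer agents local to the data, breaking ties by current load. The `AgentInfo` fields and this particular ordering are assumptions for illustration; execution history is omitted for brevity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentInfo:
    agent_id: str
    load: float                # fraction of capacity in use, 0.0 to 1.0
    specializations: frozenset
    local_to_data: bool


def route(task_type: str, agents: list) -> Optional[str]:
    """Return the id of the preferred agent, or None if none is eligible."""
    eligible = [a for a in agents if task_type in a.specializations]
    if not eligible:
        return None
    # False sorts before True, so agents local to the data come first.
    best = min(eligible, key=lambda a: (not a.local_to_data, a.load))
    return best.agent_id
```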

The task orchestration module 104 may optionally include a temporal execution coordinator 418 configured to enforce task-level timing constraints, including start-time windows, maximum execution durations, and synchronization delays between sequential tasks. The temporal execution coordinator 418 may maintain a temporal schedule per workflow instance and may raise alerts or invoke compensatory workflows when timing deviations occur. In some embodiments, the temporal execution coordinator 418 may interoperate with external scheduling systems or enterprise calendars integrated via the enterprise integration layer 112.

In some embodiments, the task orchestration module 104 may include a workflow checkpoint manager 420 configured to capture intermediate workflow states at predefined checkpoints. The workflow checkpoint manager 420 may serialize a current task graph, node states, and agent contexts into a checkpoint record stored within the workflow configuration database 110 or an auxiliary storage system. These checkpoint records may enable rollback or resumption of workflow execution in case of system failure, manual intervention, or migration between computing environments.
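A checkpoint record of the kind described above may, for example, be serialized as JSON. The record layout and the `schema_version` field are assumptions introduced for the sketch, and storage of the record is left out.

```python
import json

SCHEMA_VERSION = 1  # hypothetical version tag for the record layout


def make_checkpoint(workflow_instance_id: str, graph: dict,
                    node_states: dict, agent_contexts: dict) -> str:
    """Serialize the task graph, node states, and agent contexts."""
    return json.dumps({
        "schema_version": SCHEMA_VERSION,
        "workflow_instance_id": workflow_instance_id,
        "graph": graph,
        "node_states": node_states,
        "agent_contexts": agent_contexts,
    }, sort_keys=True)


def restore_checkpoint(record: str) -> dict:
    """Deserialize a checkpoint record for rollback or resumption."""
    state = json.loads(record)
    if state.get("schema_version") != SCHEMA_VERSION:
        raise ValueError("unsupported checkpoint schema version")
    return state
```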

It is to be appreciated that each of the components described herein as part of the task orchestration module 104, including but not limited to the workflow execution engine 402, the task dependency manager 404, the node state registry 406, the task retry manager 408, the context propagation engine 412, the task routing manager 416, the temporal execution coordinator 418, and the workflow checkpoint manager 420, may operate as discrete software modules, microservices, or internal subroutines, and may be distributed across multiple runtime environments without departing from the scope of the system 100. The task orchestration module 104 may be configured to scale horizontally to support concurrent execution of multiple workflows, while preserving logical consistency and runtime determinism of each workflow instance.

In some embodiments, the platform 100 may further include a domain-specific agent library comprising preconfigured agent templates that may be optimized for enterprise verticals such as clinical research, financial compliance, legal drafting, or logistics routing. The agent templates may encapsulate reusable logic, such as prompt structures, preferred model bindings, and output formatting rules, thereby enabling faster deployment and contextual alignment. The service platform 100 may support maturity-aware execution modes, wherein the agents 120 can operate in suggestion-only, guardrail-constrained, or fully autonomous modes depending on organizational readiness and risk tolerance.

Accordingly, the above embodiments and configurations describe a system that is modular, fault-tolerant, and capable of end-to-end task execution in a fully automated, agent-driven manner, thereby advancing the state of enterprise automation well beyond existing SaaS models or decision-support utilities.

Referring now to FIG. 5, there is illustrated a detailed runtime execution and data flow architecture of the agentic AI-based service platform 100. As depicted, the architecture of the service platform 100 may incorporate a plurality of logically and functionally distinct modules, including the workflow execution controller 118, the task orchestration module 104, the logging and traceability module 116, the enterprise integration layer 112, the model selection engine 106, the model repository 108, and the runtime agents 120 instantiated from the autonomous agent layer 102, as previously described in conjunction with FIGS. 1–4.

The workflow execution controller 118 may be configured to receive structured workflow initiation requests from the API gateway interface 114. Each request may include a workflow identifier, organizational credentials, and an initial payload. Upon receipt, the workflow execution controller 118 initiates a corresponding workflow instance and allocates runtime context memory scoped to the execution of that instance. The workflow execution controller 118 invokes the task orchestration module 104 to parse the workflow template retrieved from the workflow configuration database 110 and to decompose it into an executable task graph.

The task orchestration module 104, including components such as the workflow execution engine 402 and the node state registry 406, generates a directed acyclic graph (DAG) representation of the task nodes and respective dependencies. The task orchestration module 104 then assigns task nodes to eligible agents 120 based on evaluation performed by the task routing manager 416. Task execution directives, including model selection criteria, timeouts, and retry policies, may be transmitted to the agents 120, which may be dynamically instantiated or selected from a pool of pre-warmed containers.

Each of the agents 120, once provisioned, retrieves its execution parameters, normalizes input using the input-output normalization sub-module 308, and invokes the model selection engine 106 to determine an optimal AI model from the AI models 122. The model selection engine 106 consults the model repository 108, which returns a model invocation profile including the model identifier, sampling configuration, token constraints, and endpoint address. The agent 120 then communicates with the designated AI model 122, which may be hosted externally or internally, to generate inference results.

The inference results and task outputs generated by the agent 120 may be returned to the task orchestration module 104 and are concurrently transmitted to the logging and traceability module 116. The logging and traceability module 116 records structured logs comprising execution timestamps, model latencies, agent identifiers, result codes, and trace metadata. The logs may be stored in a persistent, append-only log database and may be indexed by workflow instance identifier to support downstream analysis, audit, or troubleshooting.

In embodiments, processed results from terminal task nodes may be routed by the workflow execution controller 118 to the enterprise integration layer 112, which acts as a secure output dispatch interface to the enterprise systems 122. These systems may include, without limitation, electronic health record (EHR) systems, enterprise resource planning (ERP) systems, financial software, and third-party APIs. The integration layer 112 may perform data transformation, protocol adaptation, and authentication management prior to dispatch. Various forms of responses from the enterprise systems 122 may also be received by the integration layer 112 and, if required, may be routed back to the agent 120 or the orchestration module 104 for feedback-based task chaining.

In some embodiments, the workflow execution controller 118 may support an internal job coordination loop for monitoring the progress of active workflows. As illustrated in FIG. 5, the workflow execution controller 118 may track the runtime lifecycle of each task, polling the node state registry 406 and invoking the temporal execution coordinator 418 to enforce timing constraints. Failure cases are delegated to the task retry manager 408, which issues retry attempts or raises escalation alerts.

The data flows shown in FIG. 5 illustrate both forward execution paths (from the API gateway interface 114 to the enterprise systems 122) and reverse feedback loops (from the enterprise system responses or failure events to the task orchestration module 104 and the agents 120). Each data transfer between modules is annotated with structured payloads, including model inputs, agent directives, context metadata, and execution logs. All modules operate asynchronously, and message queues or APIs may be used to decouple inter-module communication in production deployments.

In combination, the architecture of FIG. 5 illustrates a cohesive, modular runtime system wherein the workflow execution controller 118 acts as a central coordinator, the task orchestration module 104 performs task graph decomposition and agent allocation, the agents 120 execute model-driven logic, and the logging, integration, and model components interact to ensure reliability, observability, and external system compatibility. The structure illustrated in FIG. 5 supports scalability, resilience, and low-latency operation across a wide range of enterprise automation use cases.

In embodiments, the service platform 100 may be configured to instantiate and manage a hybrid AI-human workforce environment (also referred to simply as an AI workforce), wherein the autonomous agents 120 and human operators function as interoperable participants within a unified execution framework. Each of the agents 120 may be operatively assigned to perform structured, repeatable, and data-intensive tasks, while supervisory actions, escalation handling, and policy validation may remain assigned to designated human stakeholders via defined delegation protocols stored in the workflow configuration database 110. The workflow execution controller 118 may implement a hybrid control plane comprising both agent-defined automation logic and human-in-the-loop intervention checkpoints, allowing dynamic redistribution of task responsibility in response to contextual risk, policy classification, or operator preference.

In various embodiments, the service platform 100 may further operate as the foundational infrastructure for deploying cognitive enterprise capabilities, wherein the agents 120 persistently learn and refine their behavior over time through repeated workflow executions and feedback integration via the agent memory and learning module 132. The service platform 100 may support a distributed model of operational intelligence in which the agents 120 collaborate, coordinate, and share context via the agent protocol layer 126 and the supervisory agent 130. Such orchestration enables realization of a platform-mediated AI workforce that spans multiple enterprise domains such as but not limited to operations, finance, compliance, and customer service, while maintaining traceable accountability and autonomous execution continuity.

In an embodiment, the service platform 100 may be provisioned as a core digital workforce fabric enabling organizations to scale intelligent task execution without proportional increases in human headcount. The autonomous agents 120, in conjunction with the hybrid reasoning engine 134 and the model selection engine 106, may execute high-complexity enterprise workflows with minimal human oversight while ensuring auditability, explainability, and compliance enforcement through the security and governance module 128. Over time, the service platform 100 may support an emergence of enterprise-level self-regulating agent ecosystems, wherein fully orchestrated agent collectives autonomously operate and collaborate with business units or functional processes under policy-defined boundaries, enabling executive-level management of hybrid human-AI organizational models.

In some embodiments, the service platform 100 may comprise infrastructure components configured to support high-throughput agentic AI operations by leveraging elastic computing resources and distributed data architecture. Execution of the autonomous agents 120 may be facilitated through integration with cloud-based compute fabrics (e.g., virtualized GPU/TPU instances), enabling horizontal scaling and low-latency inference. The workflow execution controller 118 may dynamically allocate workloads across compute tiers based on complexity and execution priority. To support semantic reasoning, personalization, or recommendation tasks, one or more vector databases (e.g., Pinecone, Milvus) may be interfaced with the service platform 100 through the integration layer 112, enabling real-time embedding retrieval and similarity-based querying by the one or more agents 120 during runtime execution.

In embodiments, the service platform 100 may incorporate a resilient integration framework enabling the autonomous agents 120 to seamlessly interoperate with heterogeneous enterprise systems through use of microservices, messaging queues, and programmable APIs. For example, the integration layer 112 may mediate asynchronous communication between the agents 120 and the external systems using middleware protocols (e.g., Apache Kafka, RabbitMQ) while preserving execution order, data consistency, and fault tolerance. The use of microservice-based connectors permits isolated scaling, version control, and zero-downtime deployment of agent-facing services. In an embodiment, the agent protocol layer 126 may encapsulate endpoint discovery, data mapping, and transformation logic, thereby decoupling agent behavior from underlying system evolution and ensuring persistent inter-agent and cross-system operability during long-running workflows.

In certain embodiments, the autonomous agent layer 102 may be further configured with an adaptive trust scoring mechanism that governs agent autonomy based on historical task performance metrics. Each agent from the agents 120 may maintain a trust score that may be dynamically updated as a function of observed accuracy, error frequency, and compliance with defined task constraints over a moving evaluation window. The task orchestration module 104 may leverage the trust score to determine permissible execution scopes per agent instance, such that agents with higher scores may be authorized to initiate direct actions on the external systems via the integration layer 112, whereas lower-scoring agents may be restricted to read-only or approval-required operations. The service platform 100 may enforce this autonomy gradient at runtime through a policy-enforced decision matrix.
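The adaptive trust score and the resulting autonomy gradient may be sketched, under simplifying assumptions, as a success rate over a moving window mapped to an execution scope. The window size, the two thresholds, and the scope labels are hypothetical, and observed accuracy, error frequency, and constraint compliance are collapsed here into a single success flag per task.

```python
from collections import deque


class TrustScore:
    """Success rate over a moving window of recent task outcomes."""

    def __init__(self, window: int = 20) -> None:
        # A bounded deque drops the oldest outcome once the window is full.
        self._outcomes = deque(maxlen=window)

    def record(self, success: bool) -> None:
        self._outcomes.append(1.0 if success else 0.0)

    @property
    def value(self) -> float:
        if not self._outcomes:
            return 0.0  # no history yet: least privilege
        return sum(self._outcomes) / len(self._outcomes)

    def scope(self, direct_threshold: float = 0.9,
              approval_threshold: float = 0.6) -> str:
        """Map the current score to a permitted execution scope."""
        score = self.value
        if score >= direct_threshold:
            return "direct_action"
        if score >= approval_threshold:
            return "approval_required"
        return "read_only"
```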

In certain embodiments, the agents 120 may be provisioned with a fact-grounding module operatively coupled to a vetted knowledge base or retrieval-augmented generation (RAG) pipeline. Prior to output payload generation, the agents 120 may be configured to resolve intermediate inference results against structured reference datasets. If the resolution confidence score falls below a predefined threshold, the agents 120 may flag the output for human review or route it through a fallback logic path. This subsystem operates in conjunction with the logging and traceability module 116, which may record the provenance and confidence metadata associated with each resolved output for auditability.

In various embodiments, the integration layer 112 may perform policy-aware data routing by dynamically evaluating access requests initiated by the agents 120. This may allow schema-aware redaction or pseudonymization of data fields based on agent privilege levels and task context. Various access control rules may be defined in an enterprise-wide data governance policy store and evaluated using runtime authorization tokens associated with an initiating agent instance. This approach may support data minimization, privacy compliance, and controlled exposure of sensitive enterprise information.
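A minimal sketch of field-level redaction and pseudonymization driven by agent privilege level is shown below. The policy layout, the integer privilege levels, the `[REDACTED]` marker, and the truncated keyed hash used as a pseudonym are all assumptions for illustration; fields without a policy entry are withheld by default.

```python
import hashlib
import hmac


def apply_policy(record: dict, field_policy: dict,
                 privilege: int, key: bytes) -> dict:
    """Return a view of the record permitted at the given privilege level."""
    view = {}
    for field, value in record.items():
        rule = field_policy.get(field)
        if rule is None:
            # Default deny: unlisted fields are never exposed.
            view[field] = "[REDACTED]"
        elif privilege >= rule["min_privilege"]:
            view[field] = value
        elif rule["action"] == "pseudonymize":
            # A keyed hash gives a stable pseudonym without exposing the value.
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            view[field] = digest.hexdigest()[:12]
        else:
            view[field] = "[REDACTED]"
    return view
```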

In certain implementations, the enterprise integration layer 112 may further comprise a set of translator agents or adapter modules (not shown) that may enable semantic interoperability between the autonomous agents 120 and legacy enterprise systems. Each adapter module may be encapsulated as a dynamically loadable plug-in and registered in an adapter registry accessible by the workflow execution controller 118. At runtime, when the agents 120 require access to a legacy system with non-standard interface formats, the corresponding adapter is invoked to transform the agent’s output into a system-compliant command, thereby decoupling agent logic from back-end legacy interface constraints.
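The adapter registry described above may be illustrated by a simple mapping from a system identifier to a transformation function. The registry, the decorator, the `legacy_erp` identifier, and the fixed-width command format are hypothetical and serve only to show how agent logic may remain independent of a legacy interface.

```python
ADAPTER_REGISTRY: dict = {}


def register_adapter(system_id: str):
    """Decorator that registers an adapter under a system identifier."""
    def decorator(func):
        ADAPTER_REGISTRY[system_id] = func
        return func
    return decorator


@register_adapter("legacy_erp")
def to_fixed_width(output: dict) -> str:
    # Hypothetical legacy format: 8-char operation, 10-char account number.
    return f"{output['op']:<8}{output['account']:>10}"


def dispatch(system_id: str, output: dict) -> str:
    """Transform an agent output into a system-compliant command."""
    adapter = ADAPTER_REGISTRY.get(system_id)
    if adapter is None:
        raise KeyError(f"no adapter registered for {system_id}")
    return adapter(output)
```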

The workflow execution controller 118 may further be configured to assign a reliability score to each of the agents 120 based on predefined validation metrics, including adherence to expected response formats, consistency in inference quality, and conformance to task completion SLAs. The reliability score may be updated continuously using feedback loops incorporating human evaluations, test result differentials, and anomaly detection logs. Based on this score, the controller 118 may enforce lifecycle control policies such as task reallocation, agent sandboxing, or invocation of compensating routines in case of critical threshold violations. Each such action may be traceably recorded in the logging and traceability module 116 for compliance verification.

The various embodiments herein with respect to the service platform 100 provide an agentic AI-based enterprise platform that enables autonomous task execution across distributed enterprise systems using modular AI agents such as the agents 120. The platform 100 comprises a layered system architecture including the autonomous agent layer 102, the task orchestration module 104, the model selection engine 106, various integration interfaces, logging infrastructure, and the workflow execution controller 118, among various other components. Each AI agent 120 operates as a stateless container with internal subsystems for task execution, contextual memory, AI model invocation, and output normalization. Workflows may be defined as directed acyclic graphs and dynamically instantiated by the task orchestration module 104 based on enterprise-defined templates. In embodiments, the platform 100 supports deployment in compliance-sensitive domains and enables scalable hybrid AI-human task execution strategies.

The various components described herein and/or illustrated in the figures may be embodied as hardware-enabled modules and may be a plurality of overlapping or independent electronic circuits, devices, and discrete elements packaged onto a circuit board to provide data and signal processing functionality within a computer. An example might be a comparator, inverter, or flip-flop, which could include a plurality of transistors and other supporting devices and circuit elements. The modules that include electronic circuits process computer logic instructions capable of providing digital and/or analog signals for performing various functions as described herein. The various functions can further be embodied and physically saved as any of data structures, data paths, data objects, data object models, object files, and database components. For example, the data objects could include a digital packet of structured data. Example data structures may include any of an array, tuple, map, union, variant, set, graph, tree, node, and an object, which may be stored and retrieved by computer memory and may be managed by processors, compilers, and other computer hardware components. The data paths can be part of a computer CPU that performs operations and calculations as instructed by the computer logic instructions. The data paths could include digital electronic circuits, multipliers, registers, and buses capable of performing data processing operations and arithmetic operations (e.g., Add, Subtract, etc.), bitwise logical operations (AND, OR, XOR, etc.), bit shift operations (e.g., arithmetic, logical, rotate, etc.), and complex operations (e.g., using single clock calculations, sequential calculations, iterative calculations, etc.). The data objects may be physical locations in computer memory and can be a variable, a data structure, or a function.
Some examples of the modules include relational databases (e.g., Oracle® relational databases), and the data objects can be a table or column, for example. Other examples include specialized objects, distributed objects, object-oriented programming objects, and semantic web objects. The data object models can be an application programming interface for creating HyperText Markup Language (HTML) and Extensible Markup Language (XML) electronic documents. The models can be any of a tree, graph, container, list, map, queue, set, stack, and variations thereof, according to some examples. The data object files can be created by compilers and assemblers and contain generated binary code and data for a source file. The database components can include any of tables, indexes, views, stored procedures, and triggers.

In an example, the embodiments herein can provide a computer program product configured to include a pre-configured set of instructions, which when performed, can result in actions as stated in conjunction with various figures herein. In an example, the pre-configured set of instructions can be stored on a tangible non-transitory computer readable medium. In an example, the tangible non-transitory computer readable medium can be configured to include the set of instructions, which when performed by a device, can cause the device to perform acts similar to the ones described here.

The embodiments herein may also include tangible and/or non-transitory computer-readable storage media for carrying or having computer-executable instructions or data structures stored thereon. Such non-transitory computer readable storage media can be any available media that can be accessed by a general purpose or special purpose computer, including the functional design of any special purpose processor as discussed above.

By way of example, and not limitation, such non-transitory computer-readable media can include RAM, ROM, EEPROM, CD-ROM or other optical disk storage, magnetic disk storage or other magnetic storage devices, or any other medium which can be used to carry or store desired program code means in the form of computer-executable instructions, data structures, or processor chip design. When information is transferred or provided over a network or another communications connection (either hardwired, wireless, or combination thereof) to a computer, the computer properly views the connection as a computer-readable medium. Thus, any such connection is properly termed a computer-readable medium. Combinations of the above should also be included within the scope of the computer-readable media.

Computer-executable instructions include, for example, instructions and data which cause a special purpose computer or special purpose processing device to perform a certain function or group of functions. Computer-executable instructions also include program modules that are executed by computers in stand-alone or network environments. Generally, program modules include routines, programs, components, data structures, objects, and the functions inherent in the design of special-purpose processors, etc. that perform particular tasks or implement particular abstract data types. Computer-executable instructions, associated data structures, and program modules represent examples of the program code means for executing steps of the methods disclosed herein. The particular sequence of such executable instructions or associated data structures represents examples of corresponding acts for implementing the functions described in such steps.

The techniques provided by the embodiments herein may be implemented on an integrated circuit chip (not shown). The chip design is created in a graphical computer programming language and stored in a computer storage medium (such as a disk, tape, physical hard drive, or virtual hard drive such as in a storage access network). If the designer does not fabricate chips or the photolithographic masks used to fabricate chips, the designer transmits the resulting design by physical means (e.g., by providing a copy of the storage medium storing the design) or electronically (e.g., through the Internet) to such entities, directly or indirectly. The stored design is then converted into the appropriate format (e.g., GDSII) for the fabrication of photolithographic masks, which typically include multiple copies of the chip design in question that are to be formed on a wafer. The photolithographic masks are utilized to define areas of the wafer (and/or the layers thereon) to be etched or otherwise processed.

The resulting integrated circuit chips can be distributed by the fabricator in raw wafer form (that is, as a single wafer that has multiple unpackaged chips), as a bare die, or in a packaged form. In the latter case the chip is mounted in a single chip package (such as a plastic carrier, with leads that are affixed to a motherboard or other higher level carrier) or in a multichip package (such as a ceramic carrier that has either or both surface interconnections or buried interconnections). In any case the chip is then integrated with other chips, discrete circuit elements, and/or other signal processing devices as part of either (a) an intermediate product, such as a motherboard, or (b) an end product. The end product can be any product that includes integrated circuit chips, ranging from toys and other low-end applications to advanced computer products having a display, a keyboard or other input device, and a central processor.

Furthermore, the embodiments herein can take the form of a computer program product accessible from a computer-usable or computer-readable medium providing program code for use by or in connection with a computer or any instruction execution system. For the purposes of this description, a computer-usable or computer readable medium can be any apparatus that can comprise, store, communicate, propagate, or transport the program for use by or in connection with the instruction execution system, apparatus, or device.

The medium can be an electronic, magnetic, optical, electromagnetic, infrared, or semiconductor system (or apparatus or device) or a propagation medium. Examples of a computer-readable medium include a semiconductor or solid-state memory, magnetic tape, a removable computer diskette, a random access memory (RAM), a read-only memory (ROM), a rigid magnetic disk and an optical disk. Current examples of optical disks include compact disk - read only memory (CD-ROM), compact disk - read/write (CD-R/W) and DVD.

A data processing system suitable for storing and/or executing program code will include at least one processor coupled directly or indirectly to memory elements through a system bus. The memory elements can include local memory employed during actual execution of the program code, bulk storage, and cache memories which provide temporary storage of at least some program code in order to reduce the number of times code must be retrieved from bulk storage during execution.
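The role of the cache memories described above can be illustrated with a minimal sketch. It is not part of the disclosed system: the class names `BulkStorage` and `CachedMemory`, the least-recently-used eviction policy, and the three-instruction program are all hypothetical, chosen only to show how a small cache in front of bulk storage reduces the number of retrievals during execution.

```python
from collections import OrderedDict


class BulkStorage:
    """Hypothetical stand-in for slow bulk storage; counts each retrieval."""

    def __init__(self, blocks):
        self._blocks = dict(blocks)
        self.reads = 0

    def fetch(self, address):
        self.reads += 1
        return self._blocks[address]


class CachedMemory:
    """Small cache in front of bulk storage (LRU eviction is an assumption)."""

    def __init__(self, storage, capacity=2):
        self._storage = storage
        self._capacity = capacity
        self._cache = OrderedDict()

    def load(self, address):
        if address in self._cache:
            # Cache hit: no retrieval from bulk storage is needed.
            self._cache.move_to_end(address)
            return self._cache[address]
        # Cache miss: retrieve from bulk storage and keep a temporary copy.
        code = self._storage.fetch(address)
        self._cache[address] = code
        if len(self._cache) > self._capacity:
            # Evict the least recently used entry.
            self._cache.popitem(last=False)
        return code


def run_demo():
    """Execute a short loop of addresses and report bulk-storage retrievals."""
    storage = BulkStorage({0: "LOAD", 1: "ADD", 2: "STORE"})
    memory = CachedMemory(storage, capacity=2)
    trace = [0, 1, 0, 1, 0, 1]
    executed = [memory.load(address) for address in trace]
    return executed, storage.reads
```

In this sketch, `run_demo()` loads six instructions but touches bulk storage only for the first access to each of the two distinct addresses; the remaining accesses are served from the cache.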

Input/output (I/O) devices (including but not limited to keyboards, displays, pointing devices, etc.) can be coupled to the system either directly or through intervening I/O controllers. Network adapters may also be coupled to the system to enable the data processing system to become coupled to other data processing systems or remote printers or storage devices through intervening private or public networks. Modems, cable modems, and Ethernet cards are just a few of the currently available types of network adapters.

A representative hardware environment for practicing the embodiments herein is depicted in FIG. 6, with reference to FIGS. 1 through 5. This schematic drawing illustrates a hardware configuration of an information handling/computer system 600 in accordance with the embodiments herein.

The system 600 comprises at least one processor or central processing unit (CPU) 10. The CPUs 10 are interconnected via system bus 12 to various devices such as a random access memory (RAM) 14, read-only memory (ROM) 16, and an input/output (I/O) adapter 18. The I/O adapter 18 can connect to peripheral devices, such as disk units 11 and tape drives 13, or other program storage devices that are readable by the system. The system 600 can read the inventive instructions on the program storage devices and follow these instructions to execute the methodology of the embodiments herein. The system 600 further includes a user interface adapter 19 that connects a keyboard 15, mouse 17, speaker 24, microphone 22, and/or other user interface devices such as a touch screen device (not shown) to the bus 12 to gather user input. Additionally, a communication adapter 20 connects the bus 12 to a data processing network, and a display adapter 21 connects the bus 12 to a display device 23, which may be embodied as an output device such as a monitor, printer, or transmitter, for example. Further, a transceiver 26, a signal comparator 27, and a signal converter 28 may be connected with the bus 12 for processing, transmission, receipt, comparison, and conversion of electric or electronic signals.

The foregoing description of the specific embodiments will so fully reveal the general nature of the embodiments herein that others can, by applying current knowledge, readily modify and/or adapt such specific embodiments for various applications without departing from the generic concept, and, therefore, such adaptations and modifications should be, and are intended to be, comprehended within the meaning and range of equivalents of the disclosed embodiments. It is to be understood that the phraseology or terminology employed herein is for the purpose of description and not of limitation. Therefore, while the embodiments herein have been described in terms of preferred embodiments, those skilled in the art will recognize that the embodiments herein can be practiced with modification within the spirit and scope of this disclosure.